The moment I realized my friend was wrong about ChatGPT (or Claude or Gemini ...)

It's not a better Google search, it's something completely different, and that matters more than you think

"We shape our tools; thereafter they shape us." - Marshall McLuhan

You're about to see a flood of exciting announcements. OpenAI will trumpet new ChatGPT models, Google will dazzle us with Gemini updates, and Anthropic just released Claude 4.0. The promise is always the same: AI will do amazing things for you, faster and better than ever before.

But before we get swept up in the excitement, I think it's worth stepping back and asking a more fundamental question: what's happening inside this software when you type a question and get an answer? Because it's not a person responding to you. There are no emotions in there, no lived experience. It's just software doing something rather remarkable that we've never quite seen before.

For the rest of this article, assume AI = LLM = chatbot = ChatGPT = Claude = Gemini. Yes, there are differences, but for the sake of understanding what AI does for you, let’s keep it simple.

The long arc of finding things

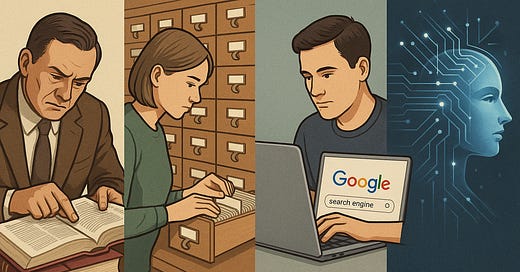

Think about how we've searched for information over the decades. We went from flipping through encyclopedia indexes to thumbing through library card catalogs to typing keywords into Google. Each step made finding things faster and easier, but the basic process stayed the same: you'd locate a source, then read it yourself.

These new generative AI tools represent something fundamentally different. They're not just finding a book or an article or giving you the quick answer that Taipei is the capital of Taiwan. They're more like someone who, working at the speed of light, connects ideas between everything they've ever read and synthesizes an answer that has never existed before. They can assemble text for an entire story on any topic, and each time you ask, the story might be different.

Last week, I told a longtime friend how I use ChatGPT for research. I referred to AI as a portal to knowledge. Waving his coffee mug like a conductor's baton, he jumped into our conversation with his understanding of AI. "It's like having Google, but way better," he said, his voice brimming with eagerness as he swirled the mug in wide arcs. "You just ask it stuff in normal English instead of trying to guess the right keywords."

I nodded along, but something nagged at me. His description felt both perfectly right and completely wrong at the same time. It's the kind of misconception that makes total sense until you peek behind the curtain and realize the magic trick is more interesting than you imagined.

The illusion of search

When you type a question into Claude or ChatGPT, it feels exactly like searching. You ask, it answers. But calling it "search" is like calling a jazz musician a jukebox because they both make music when you request a song.

Here's what happens behind the scenes when you query Google Search: its bots are constantly crawling the web, like tireless librarians cataloging every page they find. When you search for "best pizza 🍕 in Austin," Google doesn't create an answer. It rummages through its vast filing system and hands you a ranked list of pages that mention pizza and Austin, probably with some ads mixed in.
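If you like to see ideas in code, here is a minimal sketch of that filing system, assuming a toy "web" of three made-up pages. The page names, the text, and the scoring rule are all my own inventions for illustration; a real search engine is vastly more sophisticated. The point is only that the search step looks things up and ranks them. It never writes a new sentence.

```python
from collections import defaultdict

# Hypothetical mini "web": page name -> page text (made up for illustration).
PAGES = {
    "page_a": "best pizza in austin texas",
    "page_b": "austin live music guide",
    "page_c": "how to make pizza dough at home",
}

def build_index(pages):
    """Catalog step: map each word to the set of pages that contain it."""
    index = defaultdict(set)
    for name, text in pages.items():
        for word in text.lower().split():
            index[word].add(name)
    return index

def search(index, query):
    """Lookup step: rank pages by how many query words they contain."""
    scores = defaultdict(int)
    for word in query.lower().split():
        for name in index.get(word, ()):
            scores[name] += 1
    # Highest score first; ties broken alphabetically by page name.
    return sorted(scores, key=lambda name: (-scores[name], name))

INDEX = build_index(PAGES)
results = search(INDEX, "best pizza austin")
```

Here `results` is a ranked list of existing pages, with `page_a` on top because it matches all three query words. Reading and judging those pages is still your job.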

Large language models (AI) work completely differently. They're not looking anything up in real time. Instead, they're more like that friend who read everything available on the internet up until a certain point and then got their head frozen in time.

When you ask Claude or ChatGPT or Gemini about pizza, it's not consulting some cosmic auto-lookup database of pages. It's generating an answer word by word, predicting what should come next based on patterns it learned from all that text it ingested during training.
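To make "predicting what should come next" concrete, here is a deliberately tiny sketch, assuming a three-sentence training text that I made up. It only remembers which word followed which, then builds a sentence one word at a time. Real LLMs use neural networks trained on enormous amounts of text, not a lookup of word pairs, so treat this as an analogy rather than how ChatGPT, Claude, or Gemini are actually built.

```python
import random
from collections import defaultdict

# Tiny made-up "training text"; a real model learns from vastly more.
TRAINING_TEXT = (
    "the best pizza in austin is wood fired . "
    "the best tacos in austin are breakfast tacos . "
    "the best pizza in town is thin crust ."
)

def learn_patterns(text):
    """Training: record which words were seen following each word."""
    followers = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=8, seed=None):
    """Generation: build a sentence by repeatedly picking a plausible next word."""
    rng = random.Random(seed)
    words = [start]
    while len(words) < max_words and words[-1] in followers:
        words.append(rng.choice(followers[words[-1]]))
    return " ".join(words)

patterns = learn_patterns(TRAINING_TEXT)
sentence = generate(patterns, "the", seed=1)
```

Run it with different seeds and you get different sentences. Some will be word sequences that never appeared in the training text, and nothing in the code checks whether they are true. That is the creativity and the risk in one small package.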

This is why ChatGPT, Claude, or Gemini can write you a sonnet about pizza or explain quantum physics in the voice of a pirate, things that never existed in their training data. It's also why they sometimes tell you about restaurants that closed years ago or events that never happened. They're not lying; they're weaving words into patterns, like a jazz musician riffing on a familiar chord progression.

The natural language trick

My friend wasn't entirely wrong about the natural language part, but his timing missed the mark. Google has understood plain English queries for years. You don't need to type "Austin + pizza + best" like some kind of digital telegram.

The real difference is what happens after you ask. With Google, you get a list of links and you do the work of reading, evaluating, and synthesizing. With an LLM, you get what feels like a personalized answer delivered in complete sentences, as if you'd asked a knowledgeable friend rather than a filing cabinet.

This conversational style flows so naturally that it veils what’s happening. When your favorite LLM explains something, it isn’t retrieving facts from a database but stitching together fragments from its training into coherent and helpful text. Sometimes this yields remarkably insightful explanations, and other times it produces authoritative nonsense. The challenge is that both can feel equally convincing in the moment.

When search and AI collide

The plot thickens when you realize that most AI systems you interact with today don't choose between searching and generating answers. They do both.

This hybrid approach works like this: when you ask a question, the system first runs a search through carefully curated databases or even the live web to find relevant information. Then it feeds those search results to the language model and says, essentially, "Here's some current information about what the human asked. Now write a helpful response based on this." It generates a response by assembling bits of sentences it predicts as correct for your topic. Remember, its memory bank rivals the immensity of knowledge in the Library of Congress.

Imagine a lightning-fast research team that gathers sources and synthesizes them into coherent responses in seconds. This combination helps reduce those confident hallucinations while maintaining the conversational flow that makes LLMs so appealing.
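The hybrid approach described above can be sketched in three steps: search, hand the results to the model, generate. In this sketch the documents, the "Example Pizzeria" name, the word-overlap scoring, and the `fake_model` function are all hypothetical stand-ins of mine. I'm not showing any vendor's actual API, and real systems use far better retrieval than counting shared words.

```python
# Hypothetical snippets standing in for search results (made up for illustration).
DOCUMENTS = [
    "Example Pizzeria in Austin reopened in 2025 with a new menu.",
    "Dallas hosts a large live music festival every spring.",
    "Sourdough pizza dough needs a long, cold fermentation.",
]

def _words(text):
    """Lowercase the text and strip simple punctuation before splitting."""
    for mark in "?.,":
        text = text.replace(mark, "")
    return set(text.lower().split())

def retrieve(question, documents, top_k=2):
    """Step 1 (search): rank documents by words shared with the question."""
    q_words = _words(question)
    ranked = sorted(documents, key=lambda d: len(q_words & _words(d)), reverse=True)
    return [d for d in ranked[:top_k] if q_words & _words(d)]

def build_prompt(question, sources):
    """Step 2 (hand-off): put the retrieved text in front of the model."""
    numbered = "\n".join(f"[{i}] {s}" for i, s in enumerate(sources, start=1))
    return (
        "Here is some current information about what the human asked:\n"
        f"{numbered}\n\n"
        f"Question: {question}\n"
        "Write a helpful response based on this, citing sources by number."
    )

def fake_model(prompt):
    """Step 3 (generate): placeholder for a real LLM call, which is not shown."""
    return f"(model response based on a {len(prompt)}-character prompt)"

question = "Where can I get pizza in Austin?"
sources = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, sources)
answer = fake_model(prompt)
```

Notice that the model still generates the final answer. The retrieved sources only give it fresher material to work from, which is why double-checking the citations remains worthwhile.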

Why this matters

Understanding the difference between search and generation isn't just technical hairsplitting. It changes how you should approach these tools and what kind of skepticism to bring to the interaction.

When Google gives you a list of links, you instinctively know to check sources and compare perspectives. The list format itself reminds you that you're looking at multiple viewpoints that might conflict.

When your LLM gives you a polished paragraph explaining something, it's easy to forget that this confident-sounding explanation was generated, not retrieved. There's no obvious way to "check the sources" because the answer is a blend of countless texts, processed through statistical patterns in ways that even Claude's creators don't fully understand.

Some LLMs now provide citations and reference links, but double-check even those. Over time, you learn to detect when an LLM is veering off course in its responses, which is a worthwhile habit to cultivate. This isn't a criticism of LLMs. They're invaluable for learning, writing, and problem-solving, but they perform best when you understand they generate plausible text from learned patterns rather than retrieve verified facts.

I've started thinking of my interactions with ChatGPT less like consulting an encyclopedia and more like having a conversation with an extremely well-read friend who seems to have perfect recall but sometimes misremembers details. That friend might help me understand a complex concept or see a problem from a new angle, but I wouldn't bet my life savings on their stock tips without double-checking. It’s my responsibility to bring discernment to my thought process.

The future of finding things

The lines between search and generation will probably keep blurring. Google is already experimenting with AI-generated summaries at the top of search results. Meanwhile, AI chatbots are getting better at citing sources and acknowledging uncertainty.

What won't change is the need for us lifelong learners to understand what's happening under the hood. Whether you're using these tools to explore a new hobby, understand current events, or solve problems at work, knowing the difference between generation and retrieval helps you ask better questions and interpret answers more wisely.

So the next time someone describes ChatGPT as "search but better," you can nod knowingly and maybe share the fuller picture. After all, the real magic isn't in the illusion of search. It's in understanding how these tools think differently and using that knowledge to think better for ourselves.

The future of learning isn't just about having AI answer our questions. It's about learning to ask the right questions of the right tools, and knowing when to trust the answers we get. And when you get answers, take a beat, sit back, and relax to give some good old-fashioned human thought to what you learned. Time for a nap.

Thank you for your contribution of time and attention as a reader. I’ve received many lovely notes and thoughtful insights. A special thank you to those who have so generously contributed financially to AI for Lifelong Learners. What you do makes a difference and keeps me inspired.

Oh, well said, Sandy. Thank you for this. I've found using Projects helpful in the same way, but I had not articulated it quite as well as you just did. I've been using the Project feature in ChatGPT, Claude, and Gemini (where they are called Gems). They are all pretty much the same, with various limitations on the number and size of files you can give a Project.

Related to this is using Google NotebookLM. I have over 100 Notebooks, each for a specific purpose (or Project). It's an amazing product. I need to write another piece about NotebookLM because they have added new features.

When you ask someone to research a topic, you have to use judgement to measure the validity of their answer. You also have to frame their research, namely by giving context for it. It's the same with ChatGPT. I think "Projects" in ChatGPT are game-changing. When you give the right context, the search results are awesome.