Compression Is the Point

A 2026 refresher on what your AI is really doing when it answers you

As we head into 2026, AI is becoming less of a novelty and more like electricity. It’s just there: built into the tools we use, the workflows we rely on, and the decisions we make without noticing we’re making them.

That makes it a good moment to revisit a few basics. Not because you need to memorize terms, but because the right mental model changes how you use the tool. One of those basics is a term that appears everywhere in AI discussions, often without much explanation: compression.

Here’s the part that stopped me for a beat when it really landed. When you talk to an AI like ChatGPT or Claude, you’re not talking to something that has read and remembered everything. That’s impossible. Instead, you’re talking to something that has compressed an enormous portion of what we’ve written down. And that one fact explains a lot about both the benefits and the limits.

What compression actually means

Imagine you’re a student facing a final exam on all of English literature. Every novel, every poem, every essay ever written. You can’t memorize it all, so you do what any sensible person would do: you look for patterns.

You notice that tragic heroes tend to have a fatal flaw. You notice that love poems often reach for weather and seasons. You notice how English sentences tend to behave: subject, then verb, then whatever trouble comes next.

You’re not memorizing the texts. You’re extracting the regularities, the recurring moves, the habits of language and story.

That’s compression. And it’s exactly what these AI models do, just at a scale that’s hard to hold in the mind. They process trillions of words and squeeze them down into mathematical patterns compact enough to run on a server and, for the smaller models, on a phone.

However you feel about the consequences, the feat itself is real. It deserves respect.

What gets lost

But compression, by definition, is a process of leaving things out.

When you compress a photograph, you lose fine grain; you know this from seeing grainy JPG images. When you compress a song into an MP3, audiophiles will tell you something hard to name disappears. And when you compress the sum of human expression into a predictive model, you start to notice what’s missing by what keeps showing up.

One of my favorite authors, Sven Birkerts, has spent decades thinking about the trade: depth exchanged for convenience, skimming for reading, information for knowledge. AI compression can feel like the culmination of that drift. We get a system that can talk about almost anything, but the talk tends to come from the most common patterns, the well-worn paths, the phrases that offend the fewest people in the room.

If you’ve ever asked a vague question and gotten back a paragraph of calmly competent mush, you’ve touched the edge of the problem.

The model isn’t trying to give you the right answer. It’s trying to provide you with the likely answer, the one that would be least surprising given what it has seen.
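For readers who like to see the idea in miniature, here is a toy sketch of "the likely answer." It is not how ChatGPT or Claude are built: real models use neural networks over trillions of words, and they usually sample among likely words rather than always taking the top one. The tiny corpus and the function names below are made up for illustration. The sketch boils a few sentences down to counts of which word follows which, then always picks the most common continuation.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus (an assumption for illustration only).
CORPUS = (
    "the hero has a fatal flaw . "
    "the hero has a loyal friend . "
    "the hero has a fatal secret . "
    "the poet has a fatal flaw ."
)

def build_bigram_counts(text):
    """Compress the text into counts of which word follows which."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, start, max_words=10):
    """Keep appending the likeliest next word until a period or the cap."""
    output = [start]
    while len(output) < max_words:
        nxt = most_likely_next(counts, output[-1])
        if nxt is None or nxt == ".":
            break
        output.append(nxt)
    return " ".join(output)

counts = build_bigram_counts(CORPUS)
print(generate(counts, "the"))  # prints: the hero has a fatal flaw
```

Notice what dropped out. The poet, the loyal friend, and the secret were all in the source text, but the most-traveled path wins every time. That is the well-worn answer in four sentences' worth of data.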

Not thinking, but something

I want to be careful here, because it’s tempting to be glib. To say the AI is “just” a statistical machine, “just” pattern-matching, “just” autocomplete with better manners.

I don’t think that’s quite fair.

When I watch my grandchildren learn to speak, they’re also, in a sense, compressing. They listen, they infer, they predict. They try a sentence, watch what happens, adjust. But they’re doing it with bodies, and stakes, and a world that pushes back. They care about getting it right because getting it right is tied to love and belonging and safety.

The AI has no such stakes. It’s not trying to impress you, protect itself, or figure out who it is. It’s simply predicting a likely next word, over and over, until a response emerges.

That’s not nothing. It can be surprisingly useful. But it’s not the same as thinking. And it’s definitely not the same as wisdom.

What this asks of you

So what do you do with this understanding?

First, you hold it lightly. Then you use it deliberately.

One practical rule: hold your first interpretation lightly. With AI, fixed beliefs become blinders.

(A quick aside I can’t resist: do you know why angels can fly? Because they hold their beliefs lightly.)

What I mean is not “believe nothing.” It’s “stay agile.” With AI it’s easy to slip into a fixed story: it’s brilliant, it’s useless, it’s dangerous, it’s just autocomplete. Any one of those can become a way of not looking closely. A better stance is provisional: treat outputs as hypotheses, test them against your context, and revise fast.

If you give the AI vague directions, it will take you somewhere average. That’s not the model failing. It’s the nature of compression. It learned from everything, including the enormous volume of hedging, noncommittal prose we humans produce. Without your guidance, it defaults to the well-traveled route.

That “average” isn’t always a problem. Sometimes you want the reliable, standard take. But when you need originality, edge cases, or a less-traveled line of thought, you have to ask for it. I wrote a practical guide on that earlier in AIFLLL, including prompt patterns that deliberately nudge the model away from the default: “Prompting your AI to think outside the box.”

Here’s the hopeful part: you can supply what compression threw away. Your actual situation. Your values. The texture of your life, the constraints you’re living inside, the tradeoffs you’re willing to make. Your lived experience. No model can infer that cleanly. It has to be given.

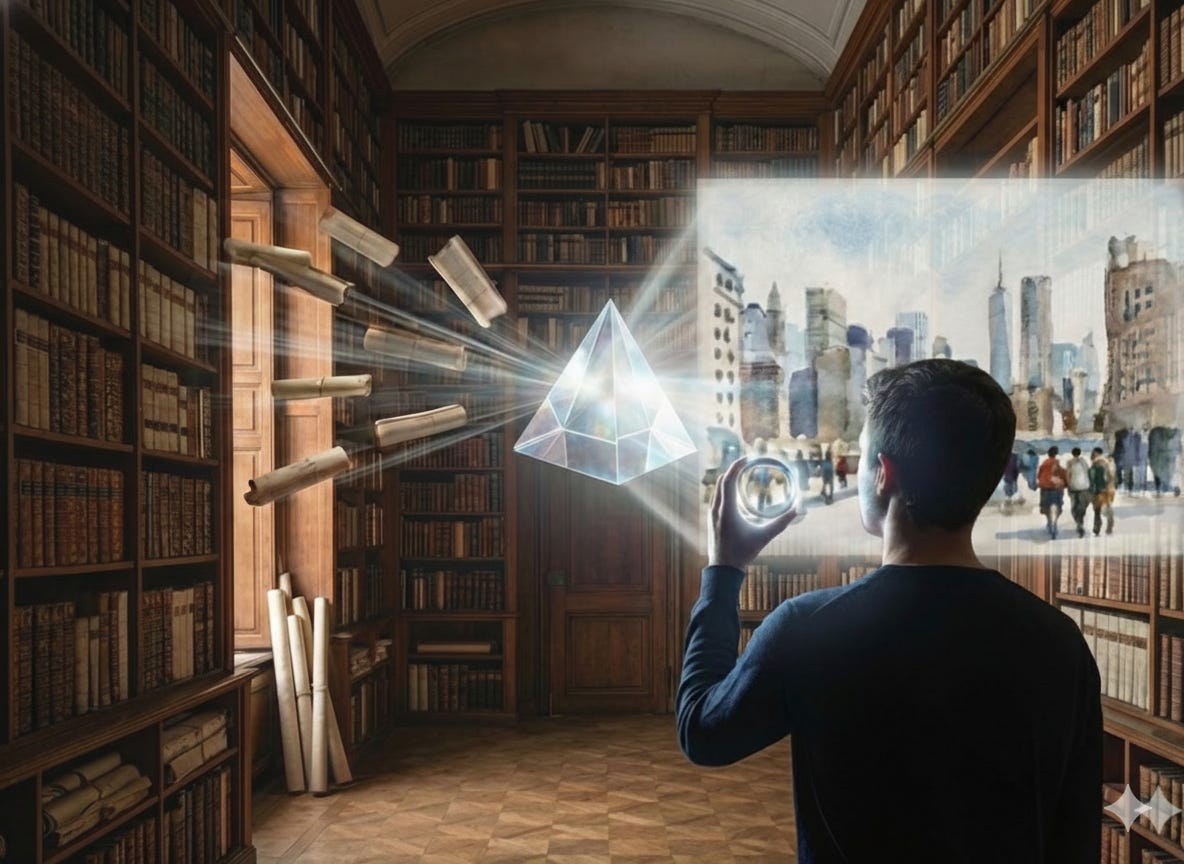

This perspective is the shift I keep returning to in this newsletter. Using AI well in 2026 isn’t primarily about finding information. We mostly solved that years ago. It’s closer to moving from being a librarian to being a director.

In the old world, your job was to locate the right book. In the new world, you aren’t doing the acting or the lighting. The AI can handle a lot of the production work. But the final result still depends on your ability to set the vision and provide the script.

The script is context plus constraints. The vision is yours.

Closing thought

AI is a tool, and like any tool, it reflects back what you bring to it. It’s a compressed map of human expression: astonishingly detailed in some ways, strangely flat in others. A map can help you move. It can’t tell you what matters.

So bring better inputs than “give me a summary.” Bring your best questions. Bring your real confusion. Bring the life you’re living right now, with all its particularity.

The shortcut is never the territory. But if you remember that going in, it can still take you somewhere worth going.

T

---

One of my trusted sources for AI is TheWhiteBox: “AI broken down into first principles, providing key insights in a language anyone can understand.” I read it weekly, and its author offers a free plan. I am not affiliated with him.
